Robots.txt

The term robots.txt refers to a text file stored in the top-level (root) directory of a website. It tells web crawlers, such as those of the search engines Google, Yahoo, and Bing, which areas of the site they should not crawl. When a bot visits a domain, it first reads the robots.txt file in the root directory and learns from it which directories and subareas of the website it may crawl and which it may not. Individual files can also be excluded, for example the URLs from which advertisements are loaded; however, the rules always apply to whole URLs, not to sections within a page. The file can be understood as a set of instructions that tells bots what they are and are not allowed to do on a website.

Where should the robots.txt be placed?

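The file must be located in the root directory of a website (for example, https://www.example.com/robots.txt) so that search engines can find it; crawlers do not look for it anywhere else. Each host can have only one robots.txt file, and that file applies only to its own host, protocol, and port: a subdomain such as shop.example.com needs its own file.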
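Because crawlers only ever look in one place, the robots.txt location for any page can be derived from the page URL alone. The following is a minimal sketch using Python's standard `urllib.parse`; the function name `robots_url` and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url):
    """Return the only place a crawler looks for robots.txt:
    the root path of the page's own scheme, host, and port."""
    parts = urlsplit(page_url)
    # Keep scheme and netloc (host and optional port), replace path, drop query/fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://www.example.com/blog/2024/post.html?id=7"))
# https://www.example.com/robots.txt
print(robots_url("https://shop.example.com/cart"))
# https://shop.example.com/robots.txt (a subdomain has its own file)
```

A file placed at, say, https://www.example.com/blog/robots.txt would therefore never be consulted.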

Structure of a robots.txt file

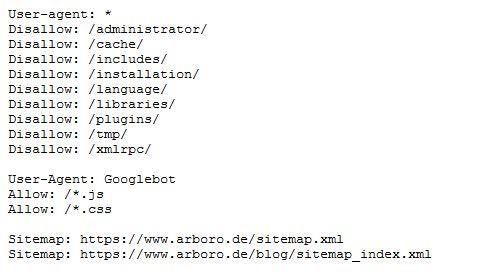

A robots.txt file is made up of groups, and each group consists of two parts. The first line names the user agent, that is, the bot the rules are meant for. It is followed by one or more "Allow" or "Disallow" lines, each giving a path that the bot may or may not crawl.

The user-agent line addresses the search engine bots. An asterisk (*) addresses all bots at once. You can also give individual bots different rules, for example allowing Googlebot to crawl a directory that Bingbot may not read. The respective bots are addressed with these names:

| Search engine | Bot |
|---|---|
| Google | Googlebot |
| Bing | Bingbot |
| Yahoo | Slurp |
| MSN | Msnbot (legacy; Microsoft's crawler today is Bingbot) |
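How per-bot groups play out can be checked with Python's standard-library `urllib.robotparser`. This is a minimal sketch; the file contents, the /reports/ directory, and www.example.com are hypothetical. Note that this parser has its own matching rules (the user-agent name is matched as a case-insensitive substring, and the first matching Allow/Disallow line wins), which can differ in edge cases from how a particular search engine evaluates the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot may crawl everything, Bingbot may not
# crawl /reports/. Directives of one group sit on consecutive lines; a blank
# line separates the groups.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: Bingbot
Disallow: /reports/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse text directly instead of fetching it

url = "https://www.example.com/reports/q1.html"  # hypothetical URL
for bot in ("Googlebot", "Bingbot", "Slurp"):
    # Slurp matches no group and there is no "*" group, so it is allowed.
    print(bot, parser.can_fetch(bot, url))
# Googlebot True
# Bingbot False
# Slurp True
```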

The Allow directive, followed by a path, tells the bot that this path may be crawled; Allow: / covers the entire site.

The Disallow directive excludes a path in the same way; Disallow: / blocks the entire site.

Example 1:

User-agent: Googlebot

Disallow: /Directory2/

This tells Google's crawler not to crawl anything in /Directory2/. Paths are case-sensitive, so /directory2/ would not be covered by this rule.
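Example 1 can be verified with Python's `urllib.robotparser`. A minimal sketch; the domain and the page names are hypothetical. One practical detail: in an actual file the two directives belong on consecutive lines. The original 1994 convention separates records with blank lines, and `urllib.robotparser` discards a User-agent line that is followed by a blank line, so the rule would be lost.

```python
from urllib.robotparser import RobotFileParser

# Example 1 from the text, with both directives on consecutive lines.
example_1 = ["User-agent: Googlebot", "Disallow: /Directory2/"]

parser = RobotFileParser()
parser.parse(example_1)

base = "https://www.example.com"  # hypothetical domain
print(parser.can_fetch("Googlebot", base + "/Directory2/page.html"))  # False: excluded
print(parser.can_fetch("Googlebot", base + "/Directory1/page.html"))  # True: not mentioned
print(parser.can_fetch("Googlebot", base + "/directory2/page.html"))  # True: paths are case-sensitive
print(parser.can_fetch("Bingbot", base + "/Directory2/page.html"))    # True: rule is for Googlebot only
```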

Example 2:

User-agent: *

Allow: /

This group tells all web crawlers that they may access every directory and subdirectory without exception.
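Example 2 has the same effect as an empty robots.txt: crawling is allowed by default. The sketch below, again using Python's `urllib.robotparser`, compares Example 2, an empty file, and the opposite case that blocks everything. The helper name `allows` and the URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def allows(lines, agent, url):
    """Parse robots.txt lines and report whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser.can_fetch(agent, url)

url = "https://www.example.com/any/sub/directory/page.html"  # hypothetical URL
example_2 = ["User-agent: *", "Allow: /"]

print(allows(example_2, "Googlebot", url))                          # True
print(allows(example_2, "SomeOtherBot", url))                       # True
print(allows([], "Googlebot", url))                                 # True: empty file allows everything
print(allows(["User-agent: *", "Disallow: /"], "Googlebot", url))   # False: whole site blocked
```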

Such exclusions are useful, for example, for subpages that are still under construction. However, a robots.txt file does not guarantee that a marked page stays out of search results. Robots.txt controls crawling, not indexing: if other pages link to a disallowed URL, a search engine can still index that URL (usually without its content) even though it never crawled it. To reliably keep pages and subdirectories out of the index or away from visitors, other methods such as HTTP authentication must be used.

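Nor does a robots.txt file protect against attacks by third parties. Compliance is voluntary, so malicious bots can simply ignore it, and because anyone can read the file, listing sensitive directories in it may even point attackers to them. Such areas should be protected with passwords instead.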

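The URL of the sitemap can be added to the robots.txt file with a Sitemap line (for example, Sitemap: https://www.example.com/sitemap.xml) so that crawlers are sure to find it. This line takes an absolute URL and applies regardless of the user-agent groups. It also helps crawlers discover and index subpages.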
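A crawler picks up the Sitemap line independently of the groups around it. In Python's `urllib.robotparser` (Python 3.8 or later), the collected sitemap URLs are returned by `site_maps()`. A minimal sketch; the file contents and the sitemap URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one group and a Sitemap line (absolute URL).
lines = [
    "User-agent: *",
    "Disallow: /Directory2/",
    "",
    "Sitemap: https://www.example.com/sitemap.xml",
]

parser = RobotFileParser()
parser.parse(lines)
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```

`site_maps()` returns `None` when the file contains no Sitemap line.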

What is behind the robots.txt file?

Robots.txt is based on the Robots Exclusion Standard, which an independent community developed in 1994. In 2008, Google, Microsoft, and Yahoo agreed on a common set of directives, which made it a de facto standard; in 2022 the IETF formally published it as RFC 9309. In the text file, each instruction consists of a field name and a value separated by a colon. The "User-agent" line tells the relevant bot (for example, Google's) that the following rules apply to it, and the lines after it state with "Allow" or "Disallow" which areas of the site it may or may not crawl. Since crawlers may crawl everything by default, the Disallow directive is used most often; Allow mainly serves to permit exceptions within a disallowed directory.
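The "field: value" structure described above is simple enough to tokenize by hand. The following is a minimal sketch of that first step only, not a full robots.txt parser; the function name `parse_lines` and the sample file are hypothetical. Splitting at the first colon only keeps URLs such as the sitemap address intact.

```python
def parse_lines(text):
    """Split robots.txt text into (field, value) pairs.

    Comments after '#' are dropped, field names are lowercased because
    they are case-insensitive, and only the first colon separates the
    field from its value.
    """
    pairs = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue  # skip blank lines, pure comments, and malformed lines
        field, value = line.split(":", 1)
        pairs.append((field.strip().lower(), value.strip()))
    return pairs

sample = """\
# Hypothetical example
User-agent: Googlebot
Disallow: /Directory2/
Sitemap: https://www.example.com/sitemap.xml
"""

for field, value in parse_lines(sample):
    print(field, "->", value)
# user-agent -> Googlebot
# disallow -> /Directory2/
# sitemap -> https://www.example.com/sitemap.xml
```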

How do you create such a file?

A robots.txt file is usually created with a simple plain-text editor; alternatively, online tools can help with the creation. Pay attention to case sensitivity: the file name must be written entirely in lowercase (robots.txt), and the paths in the rules are case-sensitive as well. Rules under User-agent: * apply to every bot, so only bots that should be treated differently need their own group, for example Google's news crawler (Googlebot-News) as opposed to Googlebot for web search. A bot that has its own group follows only that group and ignores the * rules, so general rules must be repeated there. Certain designations and commands help in implementing the robots.txt.
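Instead of an editor, the file can also be generated by a script. The sketch below renders one group per user agent from a dictionary, writes it as UTF-8 under the lowercase name robots.txt, and checks the result with `urllib.robotparser`. The rules, the helper `build_robots_txt`, and the temporary directory standing in for the web root are all hypothetical. It also shows why general rules are repeated: Googlebot-News follows only its own group.

```python
import tempfile
from pathlib import Path
from urllib.robotparser import RobotFileParser

# Hypothetical rules: all bots stay out of /tmp/; Googlebot-News additionally
# stays out of /archive/. Because a bot with its own group ignores the "*"
# group, /tmp/ is repeated in the Googlebot-News group.
RULES = {
    "*": ["Disallow: /tmp/"],
    "Googlebot-News": ["Disallow: /tmp/", "Disallow: /archive/"],
}

def build_robots_txt(rules):
    """Render one group per user agent; groups are separated by a blank line."""
    groups = ["\n".join([f"User-agent: {agent}", *directives])
              for agent, directives in rules.items()]
    return "\n\n".join(groups) + "\n"

text = build_robots_txt(RULES)

web_root = Path(tempfile.mkdtemp())  # stand-in for the site's root directory
(web_root / "robots.txt").write_text(text, encoding="utf-8")  # lowercase name, UTF-8

parser = RobotFileParser()
parser.parse(text.splitlines())
base = "https://www.example.com"  # hypothetical domain
print(parser.can_fetch("Googlebot-News", base + "/archive/2020.html"))  # False
print(parser.can_fetch("Googlebot", base + "/archive/2020.html"))       # True
print(parser.can_fetch("Googlebot", base + "/tmp/x.html"))              # False
```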